Spherical shell in box has unexpected sensitivity to grid size

|

I created a problem consisting of a spherical shell (sigma=0.12 for shell and contained sphere subdomains) inside a box (sigma=0.02). Here are the construction steps:

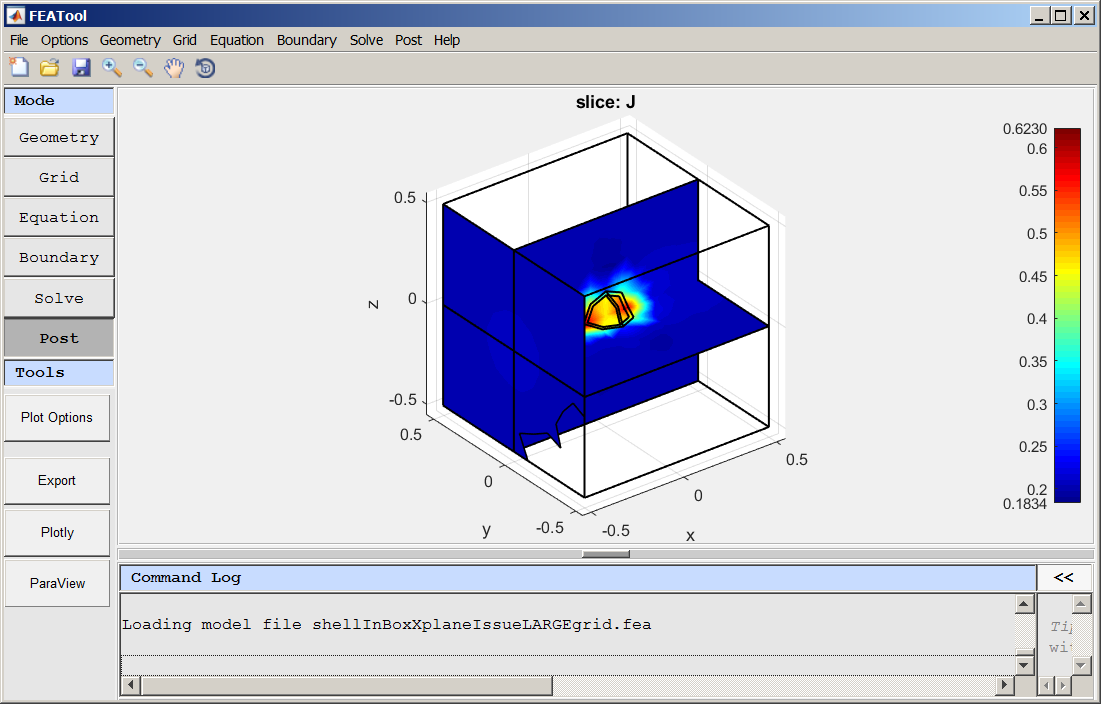

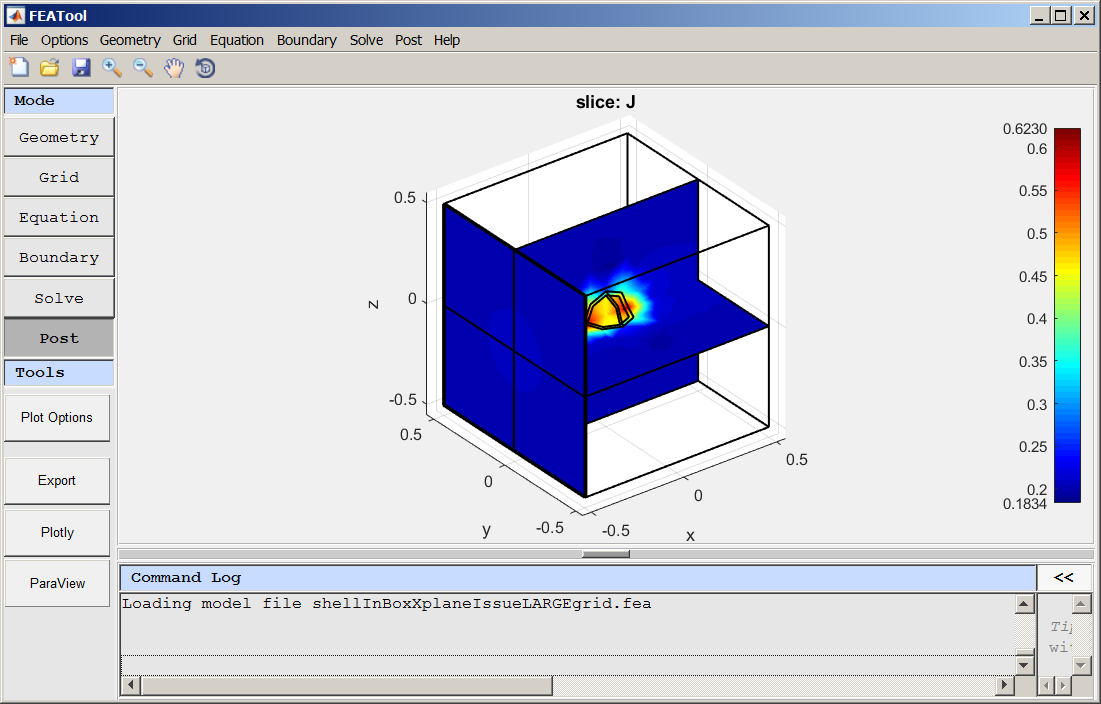

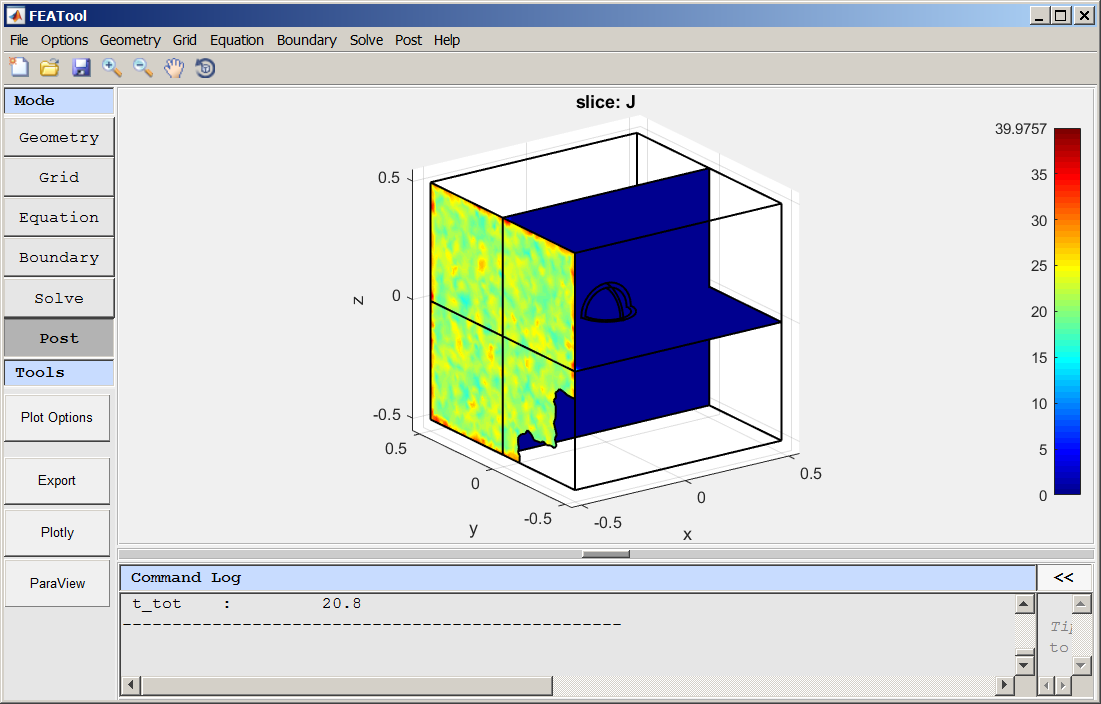

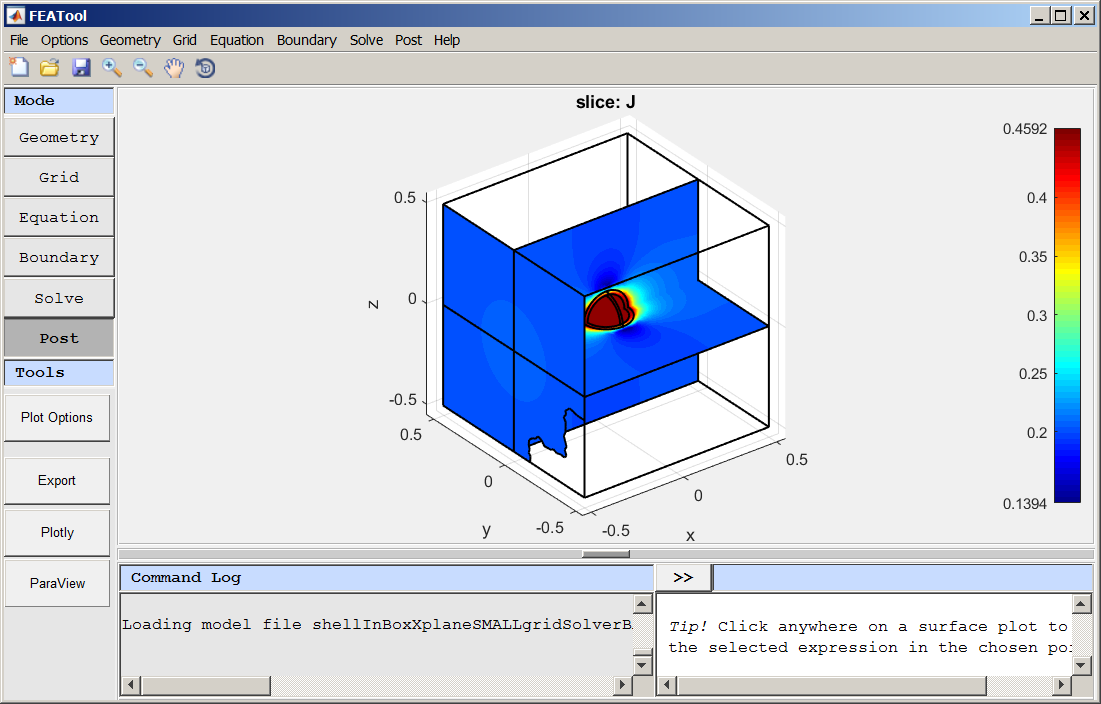

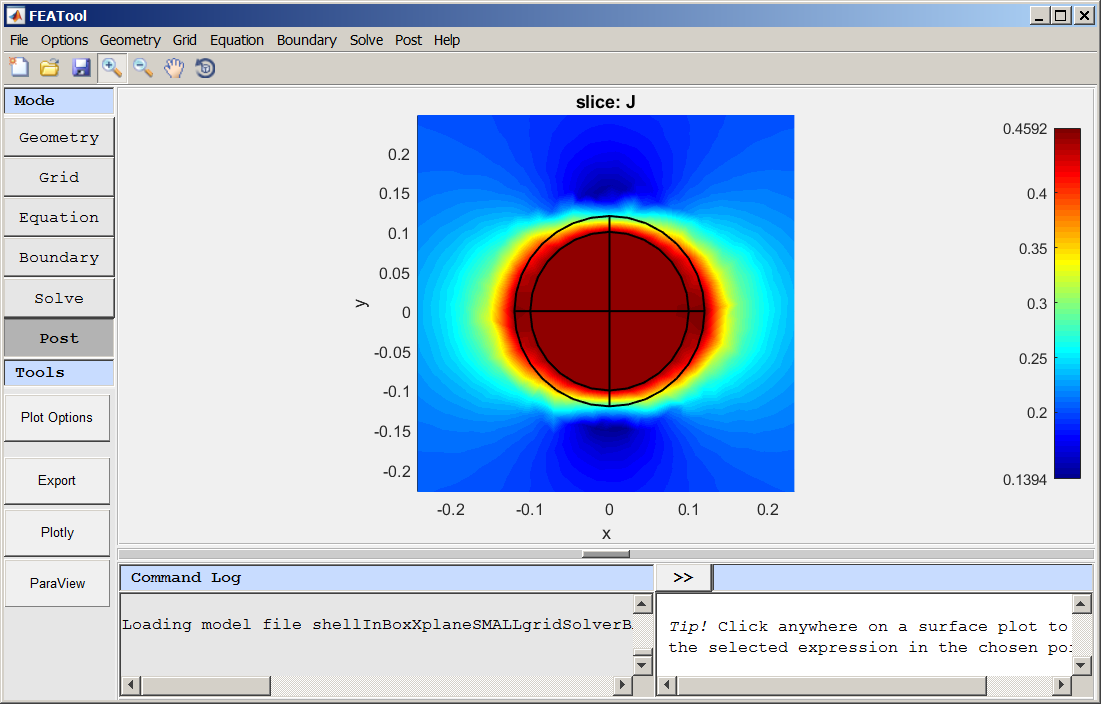

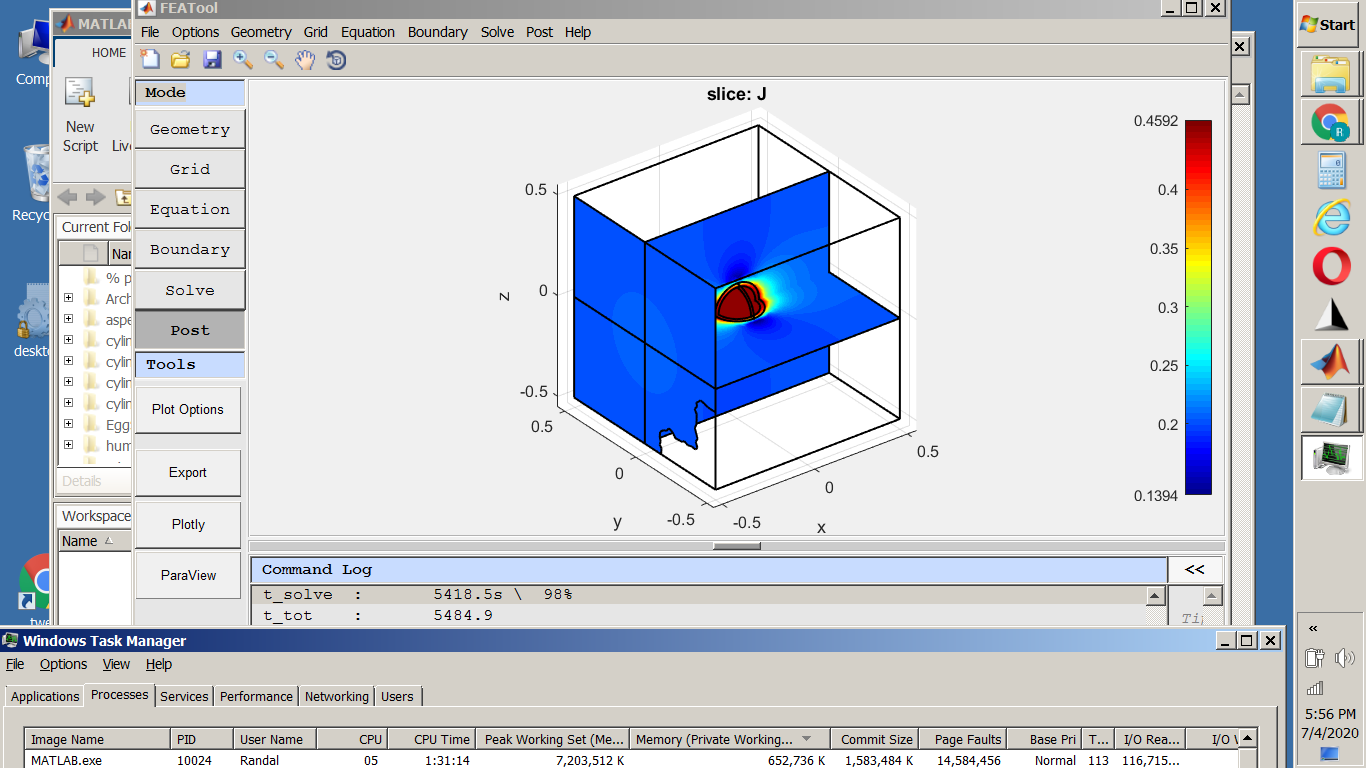

- Create 12cm radius sphere, S1
- Create 10cm radius sphere, S2
- Combine, S1-S2 = CS1
- Create Box 1m^3, centered at 0,0,0
- Grid size default 0.15
- Grid Generate
- Equation sigma .12, .12, .05
- Boundary: Internal (1-16) Continuity, 19 V=0, 21 V=10, other external J=0
- Solve

This solution looks as expected. The only noted irregularity is a slight misalignment of grid mesh and surface/boundary near (-.5, -.5, 0).

Post: 3D Slice Plot of J (current density defined in Constants), [email protected]/Y@0/Z@0 (X slice has grid cell vs. surface artifact)

... which disappears if the X plane is shifted by 1cm.

Post: 3D Slice Plot of J, [email protected]/Y@0/Z@0 (X slice artifact gone)

This form of the problem is saved as: shellInBoxXplaneIssueLARGEgrid.fea

Next I changed the grid size to 0.0260293 (likely this was initially populated by FEATool as a refinement at some point):

- Grid size 0.0260293
- Generate Grid
- Solve

But now the current density is not correct:

Post: 3D Slice Plot of J, [email protected]/Y@0/Z@0 (J is very wrong)

...and the voltage is mostly dropped across the first 5cm.

Post: 3D iso Slice Plot of V (9V dropped in 1st 5 cm)

Among other grid sizes that I tried, 0.028853 gives expected results, but 0.028 does not.

To reproduce this, you can begin with the saved intermediate problem file included above (shellInBoxXplaneIssueLARGEgrid.fea), change the grid size, and solve.

As is frequently the case, I am not sure whether this is operator error (me) or some issue/limitation.

Kind regards, Randal

Note: I did not include the final .fea file that illustrates the issue directly, because it (~14.5Mb) exceeds the 5Mb file size upload limit. |
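For scale, here is a rough sketch of how the cell count responds to the grid sizes quoted above. It assumes (this is not stated in the post) that the number of cells in a quasi-uniform 3D mesh grows like 1/h^3; the unstructured mesh in the actual model will deviate from this, so the numbers are order-of-magnitude only. The function name `cell_ratio` is hypothetical.

```python
def cell_ratio(h_coarse: float, h_fine: float) -> float:
    """Approximate growth factor of the 3D cell count when the grid size
    shrinks from h_coarse to h_fine (assumption: cells scale like 1/h^3)."""
    return (h_coarse / h_fine) ** 3


# Grid sizes quoted in the post
default_to_fine = cell_ratio(0.15, 0.0260293)
working_to_failing = cell_ratio(0.028853, 0.028)

print(f"0.15 -> 0.0260293: about {default_to_fine:.0f}x more cells")
print(f"0.028853 -> 0.028: about {(working_to_failing - 1) * 100:.0f}% more cells")
```

Under this assumption the step from a working grid size to a failing one is a small change in problem size, which would fit a resource limit being crossed rather than a change in the physics.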

|

By the way, if this issue will potentially be addressed by the forthcoming geometry engine changes, no reply is expected. I will retest after the next release.

-Randal |

Re: Spherical shell in box has unexpected sensitivity to grid size

|

Administrator

|

In reply to this post by randress

If you just plot the dependent variable, "V", you can see that the returned solution is simply the initial/boundary conditions.

This indicates that the linear solver (default MUMPS) has most likely failed (either due to a very ill-conditioned matrix or running out of memory), in this case without issuing a warning or error message. You can try the other linear solvers and see if they work better (although they are most likely not as efficient as MUMPS). |
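One solver-independent way to catch a silent failure like this is to compute the relative residual ||b - Ax|| / ||b|| of the returned vector. Below is a minimal sketch in plain Python on a toy 3x3 system; the matrix, the vectors, and the function name `relative_residual` are illustrative assumptions, not FEATool code.

```python
import math


def relative_residual(A, x, b):
    """Return ||b - A x|| / ||b|| for a dense matrix given as a list of rows."""
    r = [bi - sum(aij * xj for aij, xj in zip(row, x)) for row, bi in zip(A, b)]
    return math.sqrt(sum(ri * ri for ri in r)) / math.sqrt(sum(bi * bi for bi in b))


# Toy system (hypothetical values); its exact solution is [1, 1, 1]
A = [[2.0, -1.0, 0.0],
     [-1.0, 2.0, -1.0],
     [0.0, -1.0, 2.0]]
b = [1.0, 0.0, 1.0]

solved = relative_residual(A, [1.0, 1.0, 1.0], b)    # a genuine solution
unsolved = relative_residual(A, [0.0, 0.0, 0.0], b)  # initial guess returned unchanged

print(f"solved:   {solved:.2e}")
print(f"unsolved: {unsolved:.2e}")
```

A residual that is far above the solver tolerance suggests the returned vector is not a solution, even when no error was reported.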

|


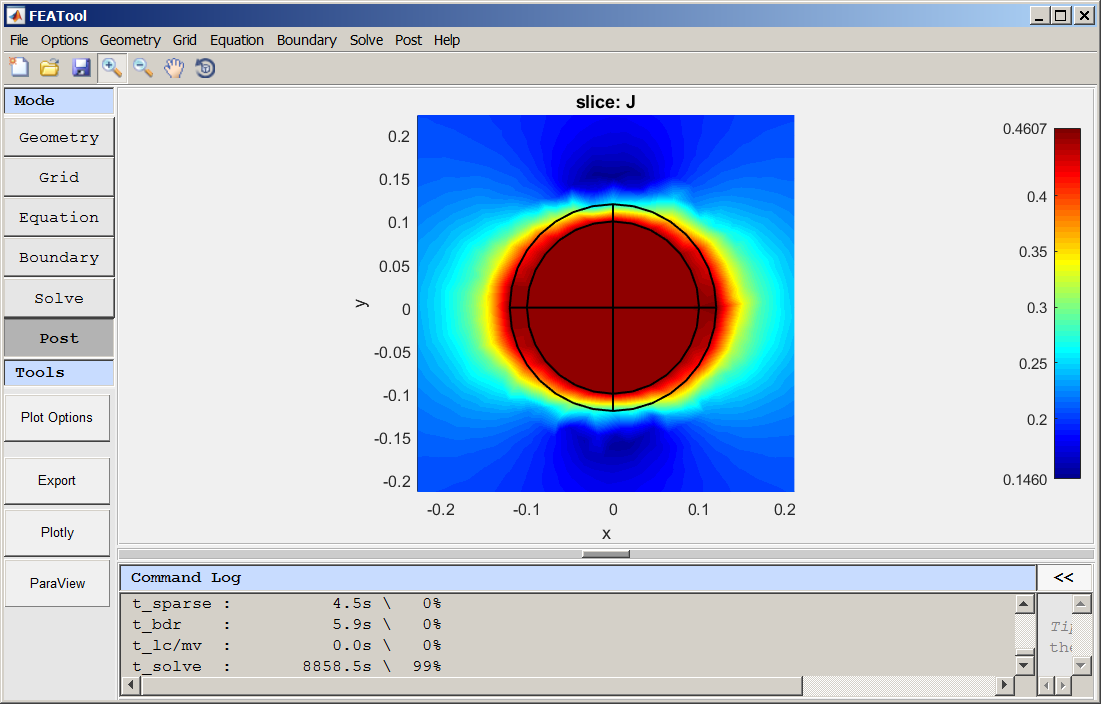

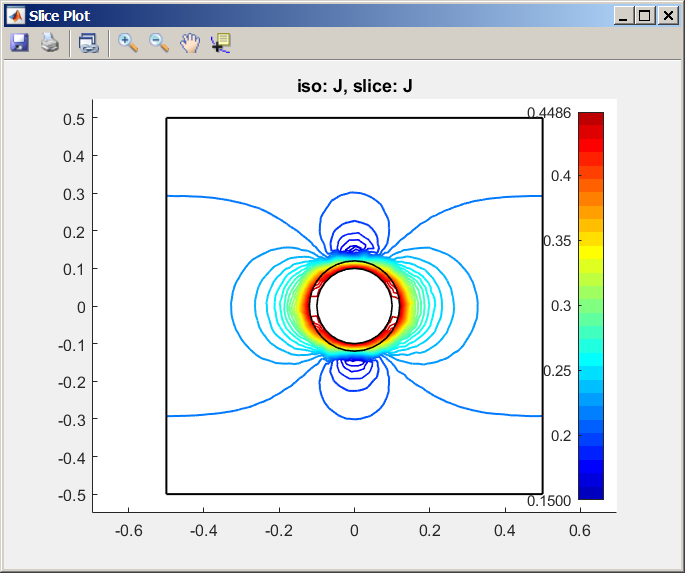

"backlash" solved it and produced a nice solution:   ...and it took ~45 cpu minutes over 2.5 wall clock hours on my laptop (i5 450 M/8Gb ram). The others (gmres/bicgstab) were killed by me after about (100/80) cpu min. and about 5 wallclock hours each. To get a timing on a successful "mumps" run, I changed the grid size to 0.026 and it took about 9000 cpusecs (2.5 cpu hr) and also produced a nice solution:  Thanks for the analysis and suggestion, -Randal |

Re: Spherical shell in box has unexpected sensitivity to grid size

|

Administrator

|

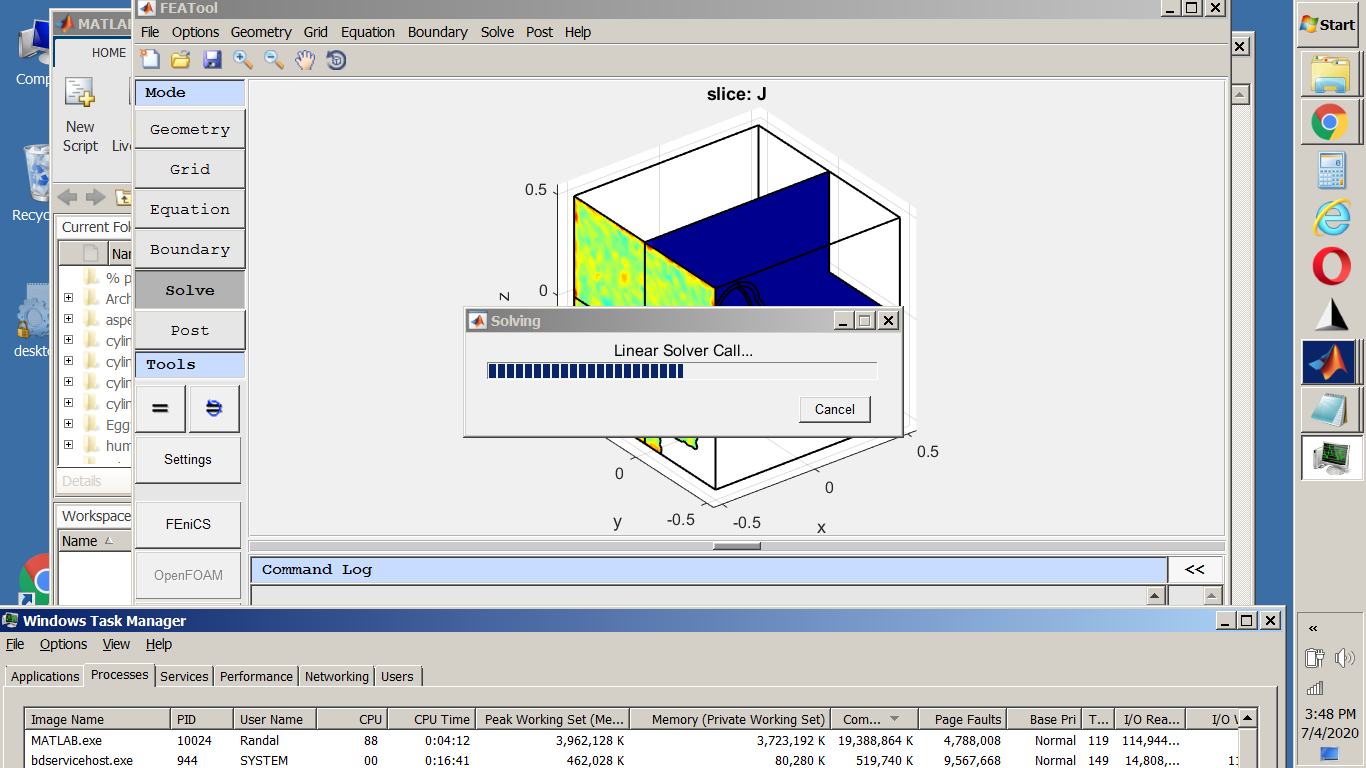

Depending on your system performance, it could be that the linear solver has exceeded available RAM and started to use swap/disk space. Although backslash/UMFPACK, built into Matlab, handles this better than MUMPS, it would still decrease performance significantly. You can see this if you start the Windows Task Manager (or top on Linux) while you're solving: disk I/O increases while CPU use drops to a few percent for the Matlab process (and memory allocation exceeds your available RAM). In your attached model you might consider going back to 1st order FEM shape functions unless you really need 2nd order, as they significantly reduce memory usage.

Iterative solvers (gmres/bicgstab) use less memory, but the number of iterations (and so the time) they need to converge grows more than linearly with problem size (unless some form of multigrid can be used). |
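To put rough numbers on the 1st versus 2nd order suggestion, here is a back-of-the-envelope sketch. It rests on two assumptions that are not from this thread: (a) on a tetrahedral mesh the 2nd order nodes (vertices plus edge midpoints) coincide with the vertices of the once-refined mesh, so there are about 8 times as many unknowns in 3D; and (b) the classical asymptotic estimates for a sparse direct factorization with nested-dissection ordering in 3D, memory ~ N^(4/3) and work ~ N^2. Constants, the denser rows of 2nd order matrices, and the ordering MUMPS actually uses are all ignored, and the names are hypothetical.

```python
# Assumption (a): 2nd order has roughly 2**3 = 8 times the unknowns of
# 1st order on the same 3D tetrahedral mesh.
DOF_FACTOR_P2_VS_P1 = 8.0


def direct_solver_growth(dof_factor: float) -> tuple[float, float]:
    """Assumption (b): asymptotic growth of factorization memory (~N^(4/3))
    and work (~N^2) for a 3D problem when the unknown count grows by dof_factor."""
    memory_factor = dof_factor ** (4.0 / 3.0)
    flops_factor = dof_factor ** 2
    return memory_factor, flops_factor


memory_factor, flops_factor = direct_solver_growth(DOF_FACTOR_P2_VS_P1)

print(f"unknowns:             ~{DOF_FACTOR_P2_VS_P1:.0f}x")
print(f"factorization memory: ~{memory_factor:.0f}x")
print(f"factorization work:   ~{flops_factor:.0f}x")
```

These factors describe the in-memory cost only. Once the factorization no longer fits in RAM, swapping adds a further slowdown that this estimate does not capture.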

|

Thanks for the discussion. I was indeed watching the process behavior with Windows Task Manager and I noticed some of the events you describe. I do recall at various times seeing the CPU use go way down and high page faults, and the memory commit size definitely exceeded my installed RAM. I don't recall how high the working set size got, but it likely exceeded 8Gb (my RAM size) also. But I do not recall enough to put a story together of what happened when using which solver :-( I suspect, based on your discussion, that had I let them finish, gmres and bicgstab would have produced good results.

A couple of thoughts at the end of this one:

1) Are there existing ways to observe progress in the linear solvers? If so, experience could teach when to kill a doomed solution and when to let it finish.

2) I am getting closer to upgrading my computer system. At a future time I would like to ask a few questions regarding concurrency (some say parallelism), to determine core scalability: at what point do more cores become unused by the various modules/programs/processes used by FEATool, including speculation regarding possible future direction with python/Opera. Also I am leaning toward using Linux.

Kind Regards, -Randal |
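On question 1, what FEATool itself exposes is not covered in this thread, but the general idea of watching an iterative solver can be sketched: record the relative residual at every iteration and check whether it is still decreasing. The sketch below uses a plain conjugate gradient loop as a short stand-in for gmres/bicgstab (a swapped-in technique, valid only for symmetric positive definite systems) on a toy 1D Laplacian. All names are hypothetical.

```python
import math


def laplace_1d(v):
    """Apply the 1D Laplacian stencil (-1, 2, -1) to v: a small SPD test operator."""
    n = len(v)
    return [2.0 * v[i]
            - (v[i - 1] if i > 0 else 0.0)
            - (v[i + 1] if i < n - 1 else 0.0)
            for i in range(n)]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cg(matvec, b, tol=1e-8, max_iter=500, callback=None):
    """Conjugate gradient from a zero initial guess; calls
    callback(iteration, relative_residual) once per iteration."""
    x = [0.0] * len(b)
    r = list(b)  # residual b - A x for x = 0
    p = list(r)  # first search direction
    rs = dot(r, r)
    b_norm = math.sqrt(dot(b, b))
    for k in range(1, max_iter + 1):
        Ap = matvec(p)
        alpha = rs / dot(p, Ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        rs_new = dot(r, r)
        rel = math.sqrt(rs_new) / b_norm
        if callback is not None:
            callback(k, rel)
        if rel < tol:
            break
        p = [ri + (rs_new / rs) * pi for ri, pi in zip(r, p)]
        rs = rs_new
    return x


history = []  # relative residual per iteration
x = cg(laplace_1d, [1.0] * 50, callback=lambda k, rel: history.append(rel))

print(f"iterations: {len(history)}, final relative residual: {history[-1]:.2e}")
```

A residual history that has stopped decreasing over many iterations is one possible sign that a run will not finish in reasonable time, whereas a slow but steady decrease suggests it may be worth waiting.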

|

A couple more things on this problem (spherical shell in box). Please make separate post(s) if appropriate.

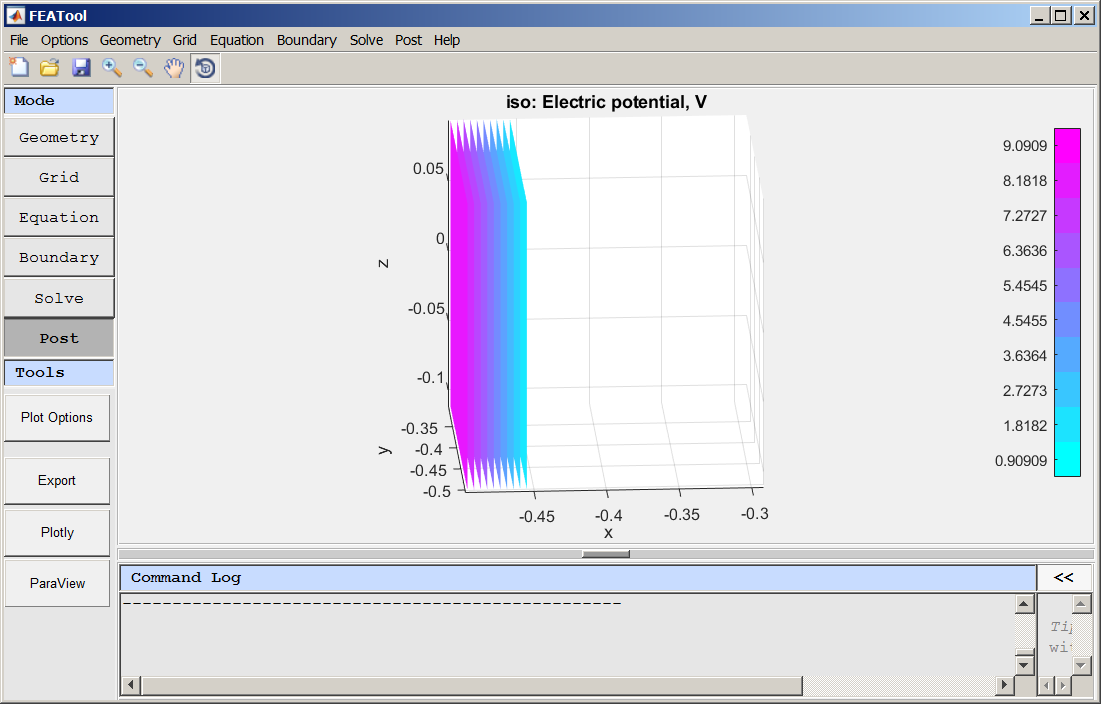

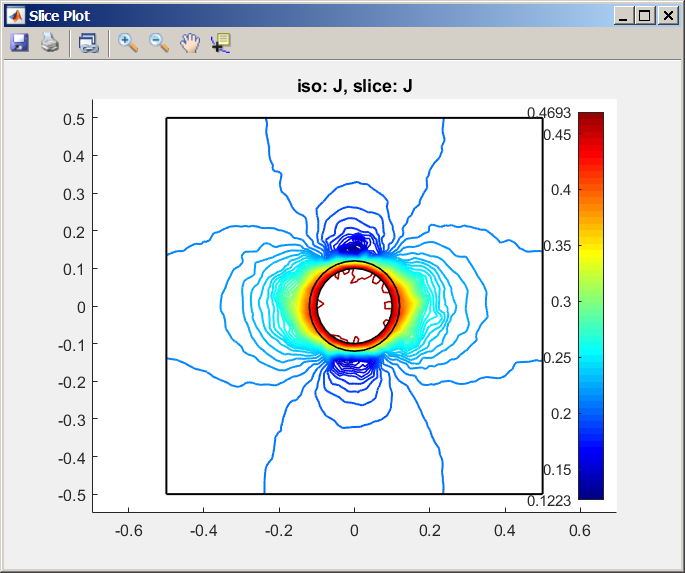

The first is a real puzzler. The answer could simply be "cockpit trouble": that I did not do what I thought I had done, and so my representations in the post were incorrect. But I hope you will consider other explanations before we both reach that conclusion :-) The second is simply a confirmation of what you mentioned (1st vs 2nd order), but the contrast is so great that I'd like a sanity check.

The first: I cannot reproduce the problem I reported, neither by beginning with the .fea that I attached and following the instructions I gave, nor by simply opening and re-solving the several instances of the problem (14Mb file) that I encountered before posting it...

+> Were you ever able to reproduce it from my instructions? You can also download it in its final form from http://randress.com/featool/shellInBoxXplaneIssue.fea

+> Is there any possibility that there is some nondeterministic process in either gmsh or MATLAB/featool?

+> Could there have been a silent update by MATLAB that I missed?

+> Can you determine anything from this log file? I think it is the only one I have. cmdLogLARGEtoIssue.txt

Each time I attempt to reproduce it, it solves correctly in about 6000 seconds or so. Note below the beginning of re-solving the file at randress.com. See the previous erroneous solution plot in the background...

At the end, you see a correct solution.

Before I posted the case I tried to be sure I could reproduce it ... I don't know what happened.

On to the second thing... Below are timing results from the solution using 2nd and then 1st order functions. Does it seem reasonable that the 1st order should be 400 times faster (6000 secs vs 15 secs)? I could not believe it.

mumps with 0.0260293, 2nd order:

| Simulation timings | Time | Share |
|---|---|---|
| t_asm(A) | 3.5s | 0% |
| t_asm(f) | 0.0s | 0% |
| t_sparse | 4.7s | 0% |
| t_bdr | 5.7s | 0% |
| t_lc/mv | 0.0s | 0% |
| t_solve | 5933.3s | 98% |
| t_tot | 6003.9 | |

Then changing back to 1st order, still with 0.0260293:

| Simulation timings | Time | Share |
|---|---|---|
| t_asm(A) | 0.8s | 5% |
| t_asm(f) | 0.0s | 0% |
| t_sparse | 0.4s | 2% |
| t_bdr | 0.6s | 3% |
| t_lc/mv | 0.0s | 0% |
| t_solve | 12.5s | 83% |
| t_tot | 15.0 | |

Sure, the iso linear plots are not as smooth, but what a price to pay.

2nd Order:

1st Order:

Again, I just wanted to see if you thought it was reasonable. I have also shown a cutaway of the grid along the x-axis (all with x>0) below.

BTW, no rush in responding to this.

Kind regards, Randal |
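The "400 times" figure can be checked directly from the two timing reports. A short sketch using only the t_solve and t_tot values quoted in the post (the variable names are hypothetical):

```python
# Timings in seconds, copied from the two reports in the post
second_order = {"t_solve": 5933.3, "t_tot": 6003.9}
first_order = {"t_solve": 12.5, "t_tot": 15.0}

# Ratio of 2nd order to 1st order times
total_speedup = second_order["t_tot"] / first_order["t_tot"]
solve_speedup = second_order["t_solve"] / first_order["t_solve"]

# Fraction of the total run spent in the linear solve
solve_share_2nd = second_order["t_solve"] / second_order["t_tot"]
solve_share_1st = first_order["t_solve"] / first_order["t_tot"]

print(f"total time ratio: {total_speedup:.0f}x")
print(f"solve time ratio: {solve_speedup:.0f}x")
print(f"solve share, 2nd order: {solve_share_2nd:.1%}")
print(f"solve share, 1st order: {solve_share_1st:.1%}")
```

The difference sits almost entirely in the linear solve step; assembly and the other stages differ by only a few seconds between the two runs.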

Re: Spherical shell in box has unexpected sensitivity to grid size

|

Administrator

|

This is entirely to be expected if your system runs out of available RAM and starts to use swap/disk space instead. |

|


After you explained the issue I began paying more attention to the process stats and I am beginning to understand. The wall-clock slowdown is not due to increased computation requirements but, as you say, to the inefficiency of constant swapping vs using pages from RAM... I am beginning to think about a new computer system to do this work... Kind regards, Randal |
